
Human Factors

Human factors and safety

In the context of technology and safety, the term ‘human factors’ refers to factors that involve humans and that have an impact on safety. Humans are involved in a technological setting in two ways: as planners and operators (that is, through professional engagement with technology) and as users (typically non-professional users, clients or customers). In any technological application, the contribution of humans is to some degree paradoxical. Humans contribute essentially to maintaining safety, not only in the design phase but also in the operational phase by controlling processes. At the same time, however, humans also make errors and thereby create dangerous situations and sometimes accidents. Estimates of how often human error is the primary causal factor in industrial and transport accidents vary somewhat, but typically range between 50% and 90%. Organisational factors may be involved in active human errors. They are typically categorised as poor or lacking procedures, training, manpower planning and the like, or sometimes as more global shortcomings such as a poor safety culture.

Human errors and organisational failures

The modern view of human error among safety specialists is that, while human error is unavoidable, the circumstances are to a large extent controllable. This applies both to the circumstances that prompt human errors and to those that allow us to capture errors before they lead to negative outcomes. This view has in large part been shaped by Rasmussen and Reason, each of whom has promoted the “systems view” of human error. On a general level, Rasmussen advocated the idea that human error is a human-system mismatch. His so-called SRK framework (skill, rule, knowledge) has been widely used for analysing human error and was subsequently developed further by Reason. Rasmussen’s three levels of performance essentially correspond to decreasing levels of familiarity or experience with the environment or task.
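The following Python sketch assigns a task to one of the three performance levels using two yes/no questions. This makes the link between familiarity and performance level concrete. It is a minimal illustration that assumes familiarity can be reduced to two questions: "is the task routine?" and "does a stored rule or procedure cover it?". The names `PerformanceLevel` and `classify_performance` are hypothetical and are not part of Rasmussen's framework.

```python
from enum import Enum


class PerformanceLevel(Enum):
    """Rasmussen's three levels of performance (SRK framework)."""
    SKILL = "skill-based"          # routine, largely automatic actions
    RULE = "rule-based"            # familiar situations handled by stored rules or procedures
    KNOWLEDGE = "knowledge-based"  # unfamiliar situations needing conscious problem solving


def classify_performance(is_routine: bool, stored_rule_applies: bool) -> PerformanceLevel:
    """Hypothetical helper: map task familiarity onto an SRK level.

    The two yes/no inputs simplify 'familiarity with the environment
    or task'; they are an assumption of this sketch, not Rasmussen's model.
    """
    if is_routine:
        return PerformanceLevel.SKILL
    if stored_rule_applies:
        return PerformanceLevel.RULE
    return PerformanceLevel.KNOWLEDGE


if __name__ == "__main__":
    # Hypothetical tasks, ordered from most to least familiar.
    tasks = {
        "open a valve operated daily": (True, True),
        "respond to a known alarm using its procedure": (False, True),
        "diagnose an unfamiliar combination of alarms": (False, False),
    }
    for name, (routine, rule) in tasks.items():
        print(f"{name}: {classify_performance(routine, rule).value}")
```

The checks are ordered from most to least familiar. A task therefore falls through to the knowledge-based level only when neither routine nor stored rules can handle it.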

Rasmussen’s skill-rule-knowledge framework for classifying performance is essentially a cognitive classification of how familiar we are with tasks and, hence, of the level of conscious effort and attention we devote to them. These distinctions between different levels of performance are important and useful, because they allow us

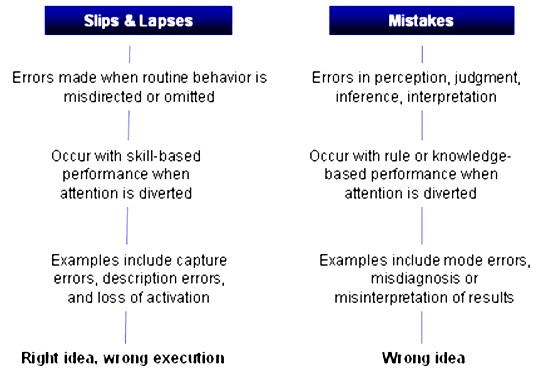

Reason adapted Rasmussen’s performance-based model, tying the classification of errors to cognitive processing. An often-used definition of human error comes from the domain of medicine but is based on Reason’s work, which originally focused on industrial safety: An error is defined as the failure of a planned action to be completed as intended or the use of a wrong plan to achieve an aim.
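Read as a decision procedure, this definition separates failures of planning from failures of execution. The following Python sketch combines it with two further elements from Reason. The first is his distinction between lapses (memory failures) and slips (response failures). The second is his mapping of error types onto the SRK levels: slips and lapses belong to the skill-based level, and mistakes to the rule-based or knowledge-based level. The enum, the `classify_error` function and its boolean inputs are hypothetical simplifications for illustration only.

```python
from enum import Enum


class ErrorType(Enum):
    SLIP = "slip"                                        # plan adequate, response failed
    LAPSE = "lapse"                                      # plan adequate, memory failed
    RULE_BASED_MISTAKE = "rule-based mistake"            # wrong plan, familiar situation
    KNOWLEDGE_BASED_MISTAKE = "knowledge-based mistake"  # wrong plan, novel situation


# Reason's mapping of error types onto Rasmussen's performance levels.
SRK_LEVEL = {
    ErrorType.SLIP: "skill-based",
    ErrorType.LAPSE: "skill-based",
    ErrorType.RULE_BASED_MISTAKE: "rule-based",
    ErrorType.KNOWLEDGE_BASED_MISTAKE: "knowledge-based",
}


def classify_error(plan_was_adequate: bool,
                   memory_failure: bool = False,
                   novel_situation: bool = False) -> ErrorType:
    """Hypothetical helper: classify an error already known to have occurred."""
    if not plan_was_adequate:
        # "Use of a wrong plan to achieve an aim": a mistake (wrong intention).
        if novel_situation:
            return ErrorType.KNOWLEDGE_BASED_MISTAKE
        return ErrorType.RULE_BASED_MISTAKE
    # "Failure of a planned action to be completed as intended": execution failure.
    return ErrorType.LAPSE if memory_failure else ErrorType.SLIP


if __name__ == "__main__":
    err = classify_error(plan_was_adequate=True, memory_failure=True)
    print(err.value, "->", SRK_LEVEL[err])  # lapse -> skill-based
```

In practice, classifying a real incident depends on the analyst's judgment about intentions and circumstances. The boolean inputs here stand in for that judgment.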

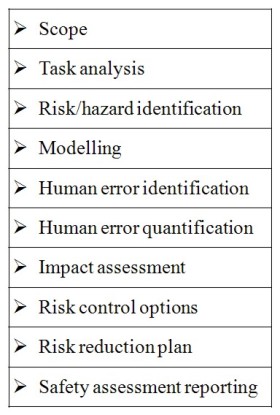

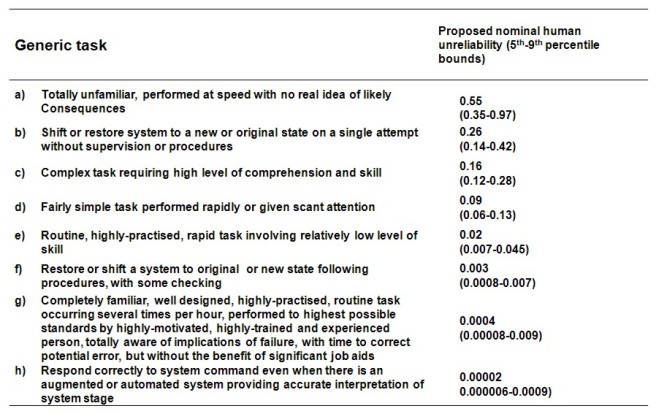

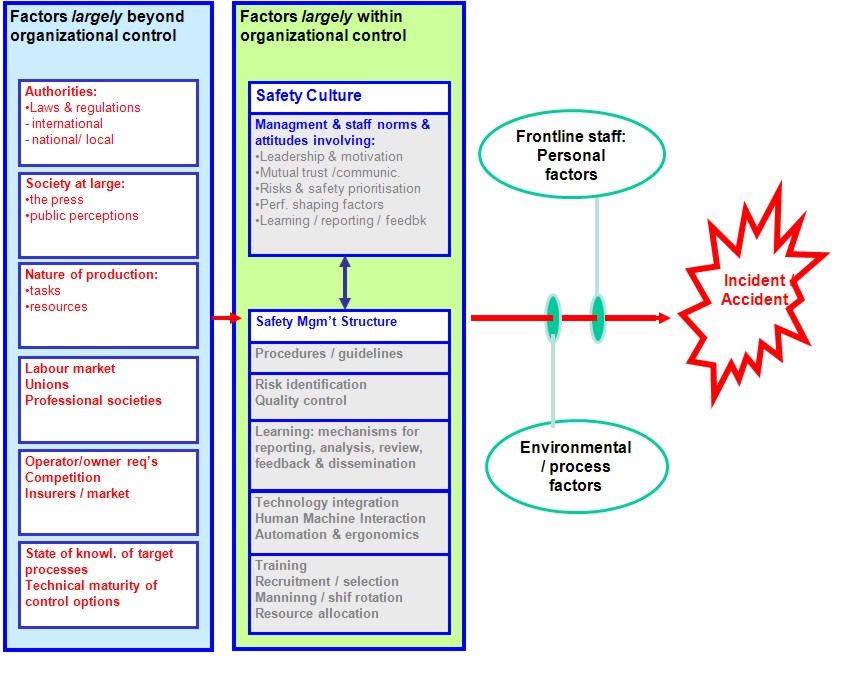

An error is thus either a failure to form a suitable plan (wrong intention) or a failure to carry out one’s plan. Reason classified the latter as lapses or slips, depending on whether the failure is one of memory (a lapse) or of response (a slip). The accompanying diagram shows Reason’s categories and how they expand upon the SRK framework.

Human Reliability Assessment (HRA)

In his well-known textbook on HRA methods, Kirwan emphasizes that one of the primary goals of human reliability analysis is to provide a means of properly assessing the risks attributable to human error. To achieve this aim, three overall phases must be carried out:

(A) Identifying what errors can occur.
(B) Deciding how likely the errors are to occur.
(C) Enhancing human reliability by reducing this error likelihood (Human Error Reduction).

Thus, HRA is used to identify, model, predict and, when possible, reduce human errors in operations. It typically covers normal production operations, maintenance, testing and emergency conditions. It is well known that the maintenance and testing phases are especially vulnerable to human errors that can seriously affect system safety. Examples include insertion of an incorrect component, miscalibration, or failure to restore the system to its operational configuration. A number of methods and techniques are available for performing a structured analysis of human reliability in the specific industrial setting in which an HRA is undertaken. Once the scope of the problem and of the exercise has been defined, a common preliminary phase of an HRA is to perform a risk assessment. This may be carried out with the techniques described in the section above on hazard identification methodologies, e.g., fault and event trees. Next, it is customary to define the interaction between humans and the system in terms of a task analysis, e.g., hierarchical or time-line task analysis.
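Phase (B), deciding how likely an error is, can be illustrated with the calculation used in HEART (Williams, 1986), which is cited in this section. In HEART, a generic task type supplies a nominal error probability. Each applicable error-producing condition (EPC) then multiplies that probability by an assessed effect of ((maximum effect - 1) × assessed proportion of affect) + 1. The following Python sketch assumes that form. The function name `heart_hep` is hypothetical, and the nominal value and EPC multipliers in the usage lines are illustrative numbers, not values quoted from the HEART tables.

```python
from typing import Sequence, Tuple


def heart_hep(nominal: float, epcs: Sequence[Tuple[float, float]]) -> float:
    """Sketch of a HEART-style human error probability (HEP).

    nominal: nominal unreliability of the generic task type.
    epcs: (maximum_effect, assessed_proportion) pairs, one per applicable
          error-producing condition; assessed_proportion lies in [0, 1].
    """
    hep = nominal
    for max_effect, proportion in epcs:
        if not 0.0 <= proportion <= 1.0:
            raise ValueError("assessed proportion of affect must lie in [0, 1]")
        # Assessed effect: scale the EPC's maximum effect by how strongly it applies.
        hep *= (max_effect - 1.0) * proportion + 1.0
    # A probability cannot exceed 1; capping is a choice made in this sketch.
    return min(1.0, hep)


if __name__ == "__main__":
    # Illustrative inputs only, not values taken from the HEART tables.
    hep = heart_hep(0.003, [(11.0, 0.4), (3.0, 0.5)])
    print(f"HEP = {hep:.3f}")  # HEP = 0.030
```

With these inputs, the two EPCs contribute assessed effects of 5 and 2, so the nominal 0.003 is scaled to about 0.03. The cap matters because the product of several large multipliers could otherwise exceed a valid probability.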
The next step involves the identification of possible errors, for example using group-based techniques such as HAZOP, as described above. Once errors have been identified, the likelihood of their occurrence must be estimated. Human error probabilities can be quantified using human error databases, expert judgment, or a combination of both. In the table overleaf we show an illustration of human error quantification from a commonly used HRA method. Finally, risk reduction options must be reviewed and, where appropriate, prioritised and selected, and a plan for their implementation drawn up.

Generic classifications (HEART, after Williams, 1986)

Safety culture

It has become widely accepted that an organisation’s safety culture can have an impact on safety performance. Two installations may be entirely alike in terms of production, ownership, workforce and procedures, and yet differ in terms of safety performance and measurable safety climate. When seeking to assess and control the impact of human factors on the level of risk of a plant or other installation, it is therefore essential to include organisational factors as well. The diagram overleaf seeks to depict how safety cultural factors, along with traditional work condition factors (here called safety management factors), are within the control of the organisation at hand. In this section we briefly review how safety culture is conceptualised in the safety analysis literature and how it may be measured. The concept of safety culture was introduced in the aftermath of the Chernobyl nuclear power plant accident in 1986. It was invoked to explain a corporate attitude and approach that tolerated gross violations and risk-taking behaviour. A large number of studies have since developed models and measures of safety culture.
One of the most widely cited definitions of safety culture was offered by the Advisory Committee on the Safety of Nuclear Installations in the UK (ACSNI, 1993), which defined safety culture as follows: The safety culture of an organisation is the product of individual and group values, attitudes, perceptions, competencies and patterns of behaviour that determine the commitment to, and the style and proficiency of, an organisation’s health and safety management. Organisations with a positive safety culture are characterised by communications founded on mutual trust, by shared perceptions of the importance of safety and by confidence in the efficacy of preventive measures (ACSNI, 1993).

The first-generation models characterised a positive safety culture in an organisation as founded on mutual trust, a shared belief in the importance of safety and a shared belief that preventive measures make a difference. Subsequent models (from the mid-1990s onwards) additionally emphasise organisational learning, i.e., the willingness and ability to learn from experience (errors, incidents and accidents). There is no “standard model” of safety culture, but there is widespread agreement that safety culture includes factors relating to

Measuring safety culture / climate

A distinction is often made between culture and climate. Culture is slow to change and involves mostly tacit beliefs and norms (unspoken, and hard or impossible to articulate). Climate, by contrast, is shaped by context and is more explicit. Empirical studies, especially surveys and interviews, are therefore usually said to uncover, at best, safety climate, whereas safety culture is characterised only indirectly. When considering empirical approaches to safety climate, decisions must be made about the following three dimensions:

(a) what should be measured (what are the factors to be measured?);
(b) how measurements should be made (what methods and techniques should be used to perform the measurement?);
(c) where measurements should be made (in which part of the organisation should sampling and appraisal be carried out?).

As described above, different analysts address the issue of what should be measured in somewhat different but not necessarily incompatible ways. There is general but not precise agreement about the factors involved in safety climate [culture]. Similarly, different methods and techniques are available for assessing safety climate: interviews, field observations, participation and surveys. Within this range of methods, however, surveys (written or web-based questionnaires, oral interview surveys) yield a uniform output that can be quantified more or less directly. Finally, an assessment of safety climate in an organisation may target the management level (top and middle management, including supervisors), the shop floor level, different operational units, and even office staff and planners. Taking this range of choices into account, the most typical safety climate [culture] assessment method is the questionnaire-based survey of staff perceptions and attitudes.
A questionnaire-based survey will typically be targeted at the operational staff, possibly including group leaders. It will, of course, use some form of safety climate questionnaire, that is, a safety climate assessment tool. A large number of such tools (questionnaires) are available, some of them domain-specific (maritime, oil production platforms, aviation, process industry, etc.). Questionnaires require the respondent to answer specific questions by selecting one of a fixed set of reply options, typically from a Likert-type rating scale: “Strongly Agree, Agree, Neither Agree Nor Disagree, Disagree, or Strongly Disagree”. Question items form groups that correspond to underlying factors (say, perception of top management commitment to safety). For validated survey tools, these factors will have been established through statistical analysis (for instance, factor analysis), possibly of data from pilot surveys. Finally, once survey tools have been applied to a range of installations or workplaces under comparable conditions, analysts can establish a benchmark. For instance, the EU project ARAMIS adapted a construction and production plant questionnaire and used it to collect data from five European Seveso-type plants. In the ARAMIS approach (Duijm et al., 2004), a single global safety climate index has been established for use in integrated assessment of safety management. This replaces a range of different indices, each corresponding to a single safety climate factor.
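The scoring steps described above can be sketched in Python: coding Likert replies, grouping items into factors, condensing the factors into one index, and comparing that index against other installations. Several elements are assumptions for illustration: the 1 to 5 coding, the item identifiers (`q1`, `q2`, ...), the unweighted averaging and the percentile benchmark. In particular, `global_index` is a hypothetical aggregation and not the ARAMIS index itself.

```python
from statistics import mean
from typing import Dict, List

# Assumed 1-5 coding of the Likert-type reply options.
LIKERT = {
    "Strongly Disagree": 1,
    "Disagree": 2,
    "Neither Agree Nor Disagree": 3,
    "Agree": 4,
    "Strongly Agree": 5,
}


def factor_scores(responses: List[Dict[str, str]],
                  factors: Dict[str, List[str]]) -> Dict[str, float]:
    """Mean coded score per factor, pooled over respondents and the factor's items."""
    return {
        factor: mean(LIKERT[r[item]] for r in responses for item in items)
        for factor, items in factors.items()
    }


def global_index(scores: Dict[str, float]) -> float:
    """Hypothetical single index: unweighted mean of the factor scores."""
    return mean(scores.values())


def benchmark_percentile(value: float, reference: List[float]) -> float:
    """Hypothetical benchmark: % of reference installations scoring at or below value."""
    return 100.0 * sum(v <= value for v in reference) / len(reference)


if __name__ == "__main__":
    # Two hypothetical respondents answering three hypothetical items.
    responses = [
        {"q1": "Agree", "q2": "Strongly Agree", "q3": "Neither Agree Nor Disagree"},
        {"q1": "Disagree", "q2": "Agree", "q3": "Agree"},
    ]
    factors = {"management_commitment": ["q1", "q2"], "communication": ["q3"]}
    scores = factor_scores(responses, factors)
    index = global_index(scores)
    print(scores, index, benchmark_percentile(index, [3.2, 3.5, 3.7, 3.9]))
```

Pooling all replies per factor gives each item equal weight within its factor. A validated tool may instead prescribe its own weighting or scoring rules.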